An Engineer's Guide to Data Contracts - Pt. 2

And the Power of the Transactional Outbox

Note from Chad: 👋 Hi folks, thanks for reading my newsletter! My name is Chad Sanderson and I write about data products, data contracts, data modeling, and the future of data engineering and data architecture. Today’s article is part 2 in a 3-part series on the implementation of data contracts, once again written by my friend and co-worker Adrian Kreuziger. This time we’re focused on application events. Subscribe below to ensure you get part 3 on semantic enforcement in your inbox, and join the Data Quality Camp Slack community for practitioner-driven advice on contract implementation and managing data quality at scale.

A Primer on Contracts, Events, and Transactional Outbox

In the last post, we wrote about implementing entity-based data contracts using a process called Change Data Capture (CDC). In this follow-up post, we’ll talk about how to create and enforce data contracts for application events using the Transactional Outbox pattern. Conceptually, contracts work the same way for both entity and application events; the main difference lies in the enforcement and fulfillment steps. Before we dive into the implementation details, let’s quickly rehash the two types of events, why we need both, and how they work together.

Entity vs Application Events

Entity events refer to a change in the state of a semantic entity. Entities are nouns that represent a company’s core business objects. In the freight world, this might include a shipper, shipment, auction, or RFP. Changes occur when the properties of each entity are updated, such as whether or not a shipment is on time.

Application events are immutable "real world" events published from application code, along with the properties that capture a snapshot of the world at the moment each event occurs. Events are verbs that map to the behavior of an entity. As an example, we might emit application events when a shipment is cancelled, a contract is signed, or a facility closes.

Why do we need both?

The answer is pretty simple: they’re useful for different things. Because entity events are powered by CDC, which operates at the data store level, they’re guaranteed to capture every state change. Entity events can be used to view the current state of a business object or to provide an audit log of how the object has been modified over time. However, while entity events tell you what changed, they don’t tell you why it changed.

This is where application events come into play. Application events are produced directly from application code and represent a real-world business action. These events contain metadata that provides context around why or how the event occurred. Application events have many uses, such as computing metrics (how many times did X event occur per day?), machine learning features (the last time a user did X), powering async workflows (do something every time X happens), operational debugging (what did the customer input that triggered X?), and so on.

It can be tempting to live with only one event type. If you’ve already implemented entity events, you might try to infer real-world occurrences from entity updates, but this leads to complex and brittle SQL in the Data Warehouse that reverse-engineers what happened in the past from the current state. Conversely, teams may want to implement only application events and derive the current state of an entity from its event log (a pattern known as Event Sourcing). In reality, correctly implementing event sourcing adds significant engineering complexity that’s rarely worth the effort. Indeed, most foundational use cases for event data begin with entity events and expand to application events over time.

Entity and Application events complement each other, and when used together allow teams to capture the data they need to run their business end-to-end.

Business Critical Data vs. Directional Data

Depending on the use case, events may need more or less ‘correctness.’ Correctness here refers to how closely the data collected from the application matches the entity data recorded in our transactional database. Data is Business Critical when directional correctness is simply not good enough: financial reporting pipelines, sensitive ML models, and embedded analytics served to customers are all examples. Directional Data refers to use cases where approximate, directionally correct data is good enough. For example, application events are generally unnecessary for tracking many parts of the user journey. You wouldn’t fire an application event for hovering over a tooltip, or even for navigating your website or app. However, once the user begins interacting with core business entities as part of the customer experience, those interactions become candidates for application events enforced through data contracts.

Data Integrity and the Transactional Outbox

For Business Critical Data, you’ll want to produce both event types for the same change in your system, which opens the door to a data integrity issue. As an example, consider a customer canceling an order. In code, you update the `status` column of the order to `CANCELED` in the database, and then publish an `OrderCanceled` event to Kafka. But what happens if your Kafka cluster is down and the `OrderCanceled` event is never emitted? Now you have a data integrity issue: the order is canceled in your database, but the downstream consumers that depend on the `OrderCanceled` application event are never notified. It’s critical that either both events are produced or neither is, and this is where the Transactional Outbox pattern comes in! We’ll go into more detail later in the post, but the Transactional Outbox pattern takes advantage of your database’s transaction implementation by tying both the entity and application event publishing to a single transaction. This gives us the desired all-or-nothing behavior and ensures integrity between event types.
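The failure mode is easy to reproduce. Below is a minimal Python sketch of the naive "dual write" approach. It assumes an in-memory SQLite database for the orders table and a hypothetical `FakeBroker` class standing in for a Kafka producer. The entity update commits first, then the publish fails, and nothing undoes the update.

```python
import sqlite3


class BrokerUnavailable(Exception):
    """Simulates a Kafka outage in this sketch."""


class FakeBroker:
    """Hypothetical stand-in for a Kafka producer."""

    def __init__(self, available: bool) -> None:
        self.available = available
        self.topics: dict[str, list[bytes]] = {}

    def publish(self, topic: str, payload: bytes) -> None:
        if not self.available:
            raise BrokerUnavailable(topic)
        self.topics.setdefault(topic, []).append(payload)


def cancel_order_dual_write(conn: sqlite3.Connection, broker: FakeBroker, order_id: str) -> None:
    # Write 1: the entity update is committed in its own transaction.
    with conn:
        conn.execute("UPDATE orders SET status = 'CANCELED' WHERE id = ?", (order_id,))
    # Write 2: the event goes to the broker separately. If this raises,
    # the committed update above is NOT undone.
    broker.publish("order-canceled", order_id.encode("utf-8"))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT NOT NULL)")
with conn:
    conn.execute("INSERT INTO orders VALUES ('o-1', 'PLACED')")

broker = FakeBroker(available=False)  # Kafka is "down"
try:
    cancel_order_dual_write(conn, broker, "o-1")
except BrokerUnavailable:
    pass

status = conn.execute("SELECT status FROM orders WHERE id = 'o-1'").fetchone()[0]
print(status, broker.topics)  # CANCELED {}  (the database and the event stream now disagree)
```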

The high level of data integrity provided by the transactional outbox pattern makes it a great fit for tracking Business Critical Data. Other tools on the market are excellent for front-end tracking, where some data loss is acceptable. Events related to core business behavior, however, generally require stronger delivery guarantees. Later in the post, I’ll cover both transactional and non-transactional events and when we decide to use each.

Implementation: Define, Enforce, Fulfill, and Monitor

The implementation of data contracts falls into four phases: defining data contracts, enforcing those contracts, fulfilling the contracts once your code is deployed, and monitoring for the semantic changes that unfortunately can’t always be caught prior to deployment. The diagram below lays out the full implementation of data contracts, from definition to deployment. We’ll break down the components of each piece of the architecture in more detail.

Application Event Contracts

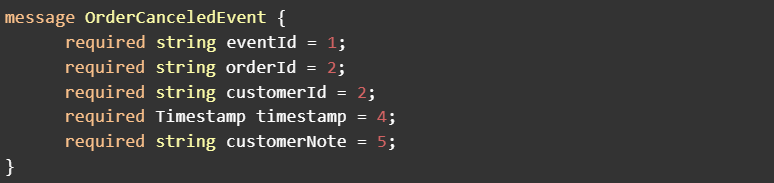

Just like entity contracts, contracts for application events are defined using well-established open-source projects for serializing and deserializing structured data. Two common projects are Google's Protocol Buffers (protobuf) and Apache Avro. Both provide an IDL (Interface Definition Language) that lets you write the schema of an event (the contract) in a language-agnostic format. That schema is then used to generate the code that serializes the event's payload before it is published to Kafka. As an example, here's a simple OrderCanceled application event-based data contract defined using protobuf.
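Application developers mostly work with the code generated from a contract, not the contract itself, so it helps to see roughly what that code looks like. The Python dataclass below is a hypothetical stand-in for a protoc-generated `OrderCanceledEvent` class. The field names (`order_id`, `reason`, `canceled_at`) are assumptions, and JSON bytes stand in for the protobuf wire format. Real protobuf-generated Python classes expose `SerializeToString()` and `ParseFromString()` for the same round trip.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class OrderCanceledEvent:
    """Hypothetical stand-in for a class generated from an OrderCanceled contract."""

    order_id: str
    reason: str
    canceled_at: str  # ISO-8601 UTC timestamp

    def to_bytes(self) -> bytes:
        # JSON stands in for the protobuf binary encoding in this sketch.
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")

    @classmethod
    def from_bytes(cls, data: bytes) -> "OrderCanceledEvent":
        return cls(**json.loads(data.decode("utf-8")))


event = OrderCanceledEvent(
    order_id="o-1", reason="CUSTOMER_REQUEST", canceled_at="2023-01-15T10:00:00Z"
)
payload = event.to_bytes()  # the bytes that would be published to Kafka
assert OrderCanceledEvent.from_bytes(payload) == event
```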

Both projects provide options for adding extra metadata to contract definitions. This metadata can annotate a contract with ownership and description information or specify value constraints for fields (covered in more depth in part 3 of this series). Protobuf supports custom options, and the Avro specification states that “Attributes not defined in this document are permitted as metadata, but must not affect the format of serialized data.”
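Python's standard library offers a loose analogy. `dataclasses.field(metadata=...)` attaches read-only metadata to a field without changing the object's data, much as the Avro rule above requires. The sketch below uses it for descriptions and allowed values, and uses a `ClassVar` for ownership. The `description` and `allowed` keys and the owner address are hypothetical conventions, not a protobuf or Avro feature.

```python
from dataclasses import asdict, dataclass, field, fields
from typing import ClassVar


@dataclass(frozen=True)
class OrderCanceledEvent:
    # Contract-level ownership metadata. A ClassVar is not a dataclass field,
    # so it never appears in the serialized data.
    OWNER: ClassVar[str] = "orders-team@example.com"  # hypothetical owner

    order_id: str = field(metadata={"description": "ID of the canceled order"})
    reason: str = field(
        metadata={
            "description": "Why the order was canceled",
            "allowed": ("CUSTOMER_REQUEST", "FRAUD", "OUT_OF_STOCK"),
        }
    )


def describe(cls: type) -> dict[str, str]:
    """Build field documentation from the metadata alone."""
    return {f.name: f.metadata.get("description", "") for f in fields(cls)}


def constraint_violations(event: OrderCanceledEvent) -> list[str]:
    """Check the 'allowed' value constraints declared in the metadata."""
    problems = []
    for f in fields(event):
        allowed = f.metadata.get("allowed")
        value = getattr(event, f.name)
        if allowed is not None and value not in allowed:
            problems.append(f"{f.name}={value!r} not in {allowed}")
    return problems


event = OrderCanceledEvent(order_id="o-1", reason="LOST_IN_TRANSIT")
print(asdict(event))  # {'order_id': 'o-1', 'reason': 'LOST_IN_TRANSIT'}
print(constraint_violations(event))  # one violation, for `reason`
```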

Protobuf and Avro have the advantage of schemas baked into their core, but JSON is also an option for serializing messages. JSON Schema can be used to declare schemas for JSON data, which provides similar benefits to protobuf and Avro. Ultimately, teams should choose whichever serialization method they prefer. Whatever they use, messages should conform to well-defined schemas.
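In practice you'd validate JSON messages with a full JSON Schema validator library. The dependency-free function below implements only a small subset of the keywords, to show what "conforming to a schema" means in concrete terms. It supports `type` (strings and booleans only), `enum`, `required` and `additionalProperties`. The schema itself is a hypothetical version of the OrderCanceled contract.

```python
import json
from typing import Any

# Hypothetical JSON Schema for the OrderCanceled contract.
ORDER_CANCELED_SCHEMA: dict[str, Any] = {
    "type": "object",
    "properties": {
        "order_id": {"type": "string"},
        "reason": {"type": "string", "enum": ["CUSTOMER_REQUEST", "FRAUD", "OUT_OF_STOCK"]},
        "canceled_at": {"type": "string"},
    },
    "required": ["order_id", "reason", "canceled_at"],
    "additionalProperties": False,
}

# Only the JSON Schema types this sketch supports.
_PY_TYPES: dict[str, type] = {"string": str, "boolean": bool}


def validate(message: dict[str, Any], schema: dict[str, Any]) -> list[str]:
    """Return a list of violations (empty means the message conforms)."""
    errors = [
        f"missing required property {name!r}"
        for name in schema.get("required", [])
        if name not in message
    ]
    properties = schema.get("properties", {})
    for name, value in message.items():
        spec = properties.get(name)
        if spec is None:
            if schema.get("additionalProperties", True) is False:
                errors.append(f"unexpected property {name!r}")
            continue
        if not isinstance(value, _PY_TYPES[spec["type"]]):
            errors.append(f"{name!r} should be of type {spec['type']}")
        elif "enum" in spec and value not in spec["enum"]:
            errors.append(f"{name!r} must be one of {spec['enum']}")
    return errors


good = json.loads('{"order_id": "o-1", "reason": "FRAUD", "canceled_at": "2023-01-15T10:00:00Z"}')
bad = json.loads('{"order_id": 1, "reason": "LOST", "note": "x"}')
print(validate(good, ORDER_CANCELED_SCHEMA))  # []
print(len(validate(bad, ORDER_CANCELED_SCHEMA)))  # 4
```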

Data Contract Enforcement

A contract by definition requires enforcement. Violations of agreed-upon semantics are harder to catch before deployment, but a mechanism to prevent schema-breaking changes MUST exist. By making contract definitions part of a service's code, we're able to validate and enforce contracts in our CI/CD pipeline as part of the normal deployment process. There are two steps to contract enforcement: making sure a service's code correctly implements the defined contracts, and ensuring that any changes made to a contract won't break existing consumers.

Compile Time Checks

Unlike entity events, where the final event is produced indirectly by a stream processing job, application events are generated directly from application code. This makes enforcing correct contract schema implementation much easier! If you’re using a statically typed language (Java, C++, C#), you get much of this for free: if you try to set a field to the wrong type, you’ll get a compile-time error. Depending on the serialization library, a missing value for a required field may only be reported at runtime, when the object is built.

For dynamically typed languages, there are often still ways to perform static type checking, but it takes a bit more effort. For example, Python added support for optional type annotations in PEP 484 and PEP 526, and (amazing!) projects such as mypy let you run static type checks as part of your build. There are even projects that bridge the gap between generated code and mypy, such as mypy-protobuf, which generates type stubs for protobuf-generated Python code.
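Here is a small sketch of what this buys you in Python, using hypothetical `CancelReason` and `OrderCanceledEvent` types. The commented-out calls are the kind of mistakes mypy reports during the build. Without mypy, the first would run silently and the second would only fail when that line executes.

```python
from dataclasses import dataclass
from enum import Enum


class CancelReason(Enum):
    CUSTOMER_REQUEST = "CUSTOMER_REQUEST"
    FRAUD = "FRAUD"


@dataclass(frozen=True)
class OrderCanceledEvent:
    order_id: str
    reason: CancelReason


def build_event(order_id: str, reason: CancelReason) -> OrderCanceledEvent:
    return OrderCanceledEvent(order_id=order_id, reason=reason)


ok = build_event("o-1", CancelReason.FRAUD)

# Both of these would be reported by `mypy` during the build:
#   build_event(42, CancelReason.FRAUD)   # int is not str; runs silently without mypy
#   OrderCanceledEvent(order_id="o-1")    # missing "reason"; fails only when executed
```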

Schema Compatibility

For the second step, we use the Confluent (Kafka) Schema Registry. Once we've verified that the service code will correctly fulfill the defined contracts, we take the schemas of the event contracts and use the production Schema Registry to check for backward-incompatible changes. This is covered in more detail in our previous article.

Finally, once all enforcement checks pass, we publish any new contracts, or new versions of existing contracts, to the Schema Registry before deploying the code.
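Both Schema Registry steps map onto its REST API. `POST /compatibility/subjects/{subject}/versions/latest` tests a candidate schema against the latest registered version, and `POST /subjects/{subject}/versions` registers it. The sketch below builds those requests with the standard library. It assumes the default `TopicNameStrategy` subject name (`<topic>-value`) and a hypothetical `order-canceled` topic. The transport is injected as `send`, so the CI gate can be exercised without a running registry.

```python
import json
import urllib.request
from typing import Any, Callable

CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"
Send = Callable[[urllib.request.Request], dict[str, Any]]


def subject_for(topic: str) -> str:
    # Default TopicNameStrategy: the value schema's subject is "<topic>-value".
    return f"{topic}-value"


def _post(url: str, schema: str) -> urllib.request.Request:
    body = {"schema": schema, "schemaType": "PROTOBUF"}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": CONTENT_TYPE},
        method="POST",
    )


def compatibility_request(base_url: str, topic: str, schema: str) -> urllib.request.Request:
    # Checked against the latest version using the subject's compatibility level.
    return _post(f"{base_url}/compatibility/subjects/{subject_for(topic)}/versions/latest", schema)


def register_request(base_url: str, topic: str, schema: str) -> urllib.request.Request:
    return _post(f"{base_url}/subjects/{subject_for(topic)}/versions", schema)


def send_http(req: urllib.request.Request) -> dict[str, Any]:
    """Real transport; not exercised in this sketch."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def ci_gate(base_url: str, topic: str, schema: str, send: Send = send_http) -> int:
    """Fail the build on an incompatible change, otherwise register the schema."""
    verdict = send(compatibility_request(base_url, topic, schema))
    if not verdict.get("is_compatible", False):
        raise SystemExit(f"backward-incompatible change to {subject_for(topic)}")
    return send(register_request(base_url, topic, schema))["id"]  # registry-assigned schema id
```

One caveat: a brand-new contract has no "latest" version yet, so the registry answers the compatibility call with a "subject not found" error. A real pipeline would treat that case as "nothing to check" and go straight to registration.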

Data Contract Fulfillment

Fulfillment is the implementation stage where entity and application events differ the most. Unlike entity events where raw CDC topics are transformed into contract topics, application events represent a contract in its entirety (no abstraction layer needed) and have a single topic per contract. Application events can therefore be emitted to topics via the transactional outbox or, in some cases, emitted directly to the topic itself (non-transactional).

Non-Transactional vs Transactional Fulfillment

While not strictly required, you’ll almost certainly want to implement event publishing via the transactional outbox, so that developers have the option of ensuring data integrity for critical application events. Use non-transactional events in two cases. The first is high-throughput Directional Data, where a small amount of data loss is acceptable. The second is when no entity is being updated. For example, if you don’t persist user searches anywhere in your database, there’s no point in using transactional publishing to collect each search event. Use transactional events to publish Business Critical events that coincide with an update to an entity, such as canceling an order.

One thing that’s very important to understand - whether using non-transactional or transactional event publishing, the outcome is identical (an event gets written to a Kafka topic). You should view transactional publishing as an implementation detail - it’s important to know when to use it, but at the end of the day you’re still just publishing an event.

Event Construction

Both Avro and protobuf generate code that you can use to construct an object for your event. Using the above OrderCanceledEvent proto, constructing an OrderCanceledEvent object would look like the following:
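Here is a hedged Python sketch of event construction. It assumes a hypothetical `OrderCanceledEvent` with an enum-typed `reason` and a UTC `canceled_at` timestamp. A small factory function fills in the timestamp and rejects naive datetimes, so every producer builds the event the same way. Protobuf-generated Python classes also accept keyword arguments in their constructors.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class CancelReason(Enum):
    CUSTOMER_REQUEST = 1
    OUT_OF_STOCK = 2


@dataclass(frozen=True)
class OrderCanceledEvent:
    order_id: str
    reason: CancelReason
    canceled_at: datetime  # always timezone-aware UTC


def order_canceled(
    order_id: str, reason: CancelReason, now: Optional[datetime] = None
) -> OrderCanceledEvent:
    """Construct the event, stamping it with the current UTC time by default."""
    ts = now if now is not None else datetime.now(timezone.utc)
    if ts.tzinfo is None:
        raise ValueError("canceled_at must be timezone-aware")
    return OrderCanceledEvent(
        order_id=order_id, reason=reason, canceled_at=ts.astimezone(timezone.utc)
    )


event = order_canceled("o-1", CancelReason.CUSTOMER_REQUEST)
```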

Non-Transactional

Fulfillment of non-transactional events is pretty straightforward - you simply publish the event directly to the public contract topic.
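Below is a minimal sketch of non-transactional publishing. It assumes a hypothetical `Producer` protocol with a `send(topic, key, value)` method, plus an in-memory implementation for testing. With the confluent-kafka client, the equivalent call would be `Producer.produce(topic, value=..., key=...)`. Nothing ties this send to a database write, which is why it suits Directional Data and events with no accompanying entity update.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Protocol


@dataclass(frozen=True)
class OrderCanceledEvent:
    order_id: str
    reason: str

    def to_bytes(self) -> bytes:
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")  # protobuf stand-in


class Producer(Protocol):
    """Hypothetical minimal producer interface."""

    def send(self, topic: str, key: bytes, value: bytes) -> None: ...


@dataclass
class InMemoryProducer:
    """Test double that records what would have been sent to Kafka."""

    records: list[tuple[str, bytes, bytes]] = field(default_factory=list)

    def send(self, topic: str, key: bytes, value: bytes) -> None:
        self.records.append((topic, key, value))


ORDER_CANCELED_TOPIC = "order-canceled"  # hypothetical contract topic


def publish(producer: Producer, event: OrderCanceledEvent) -> None:
    # Straight to the public contract topic: no database transaction is involved.
    producer.send(ORDER_CANCELED_TOPIC, key=event.order_id.encode("utf-8"), value=event.to_bytes())


producer = InMemoryProducer()
publish(producer, OrderCanceledEvent(order_id="o-1", reason="FRAUD"))
```

Keying each record by `order_id` means Kafka's default partitioner sends every event for a given order to the same partition, which preserves per-order ordering.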

Transactional

The transactional outbox pattern is implemented with the Debezium project used for CDC in the previous post. In this case, you’ll run a Debezium connector instance, separate from the existing CDC connector, that’s configured to route events from the `outbox` table in your database to the correct topic. I’m not going to rehash the implementation of the transactional outbox pattern in this article, as the Debezium team has already written a great post on it. In our example, because we’re using protobuf, the payload column of the outbox table needs to store binary data.

The fulfillment of transactional events looks a bit different and is more nuanced. It’s critical that developers understand how database transactions work, and how to use them correctly, when using transactional publishing. In the example below, a single transaction updates the order row to set its status to canceled and writes an OrderCanceledEvent row to the outbox table. If the transaction is committed, the order row’s status changes to canceled and the OrderCanceledEvent gets published. If the transaction is rolled back, neither happens.
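The sketch below shows the same flow in Python against an in-memory SQLite database. The outbox columns (`id`, `aggregatetype`, `aggregateid`, `type`, `payload`) follow the default layout in Debezium's outbox documentation. `payload` is a BLOB because protobuf payloads are binary, and JSON bytes stand in for protobuf here. The second call reuses an event id, so the outbox insert fails after the order update has already run, and the whole transaction rolls back.

```python
import json
import sqlite3

SCHEMA = """
CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT NOT NULL);
CREATE TABLE outbox (
    id            TEXT PRIMARY KEY,  -- unique event id
    aggregatetype TEXT NOT NULL,     -- Debezium's outbox router picks the topic from this by default
    aggregateid   TEXT NOT NULL,     -- used as the Kafka message key by default
    type          TEXT NOT NULL,     -- event type name
    payload       BLOB               -- serialized event (binary for protobuf)
);
"""


def cancel_order(conn: sqlite3.Connection, order_id: str, reason: str, event_id: str) -> None:
    payload = json.dumps({"order_id": order_id, "reason": reason}).encode("utf-8")  # protobuf stand-in
    # `with conn` commits if the block succeeds and rolls back if it raises,
    # so the entity update and the outbox row are all-or-nothing.
    with conn:
        cur = conn.execute("UPDATE orders SET status = 'CANCELED' WHERE id = ?", (order_id,))
        if cur.rowcount != 1:
            raise LookupError(f"order {order_id!r} not found")
        conn.execute(
            "INSERT INTO outbox (id, aggregatetype, aggregateid, type, payload) "
            "VALUES (?, ?, ?, ?, ?)",
            (event_id, "order", order_id, "OrderCanceledEvent", payload),
        )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
with conn:
    conn.executemany("INSERT INTO orders VALUES (?, 'PLACED')", [("o-1",), ("o-2",)])

cancel_order(conn, "o-1", "CUSTOMER_REQUEST", event_id="evt-1")
try:
    # Reusing "evt-1" violates the outbox primary key AFTER the UPDATE ran.
    cancel_order(conn, "o-2", "FRAUD", event_id="evt-1")
except sqlite3.IntegrityError:
    pass

statuses = dict(conn.execute("SELECT id, status FROM orders ORDER BY id"))
outbox_keys = [row[0] for row in conn.execute("SELECT aggregateid FROM outbox")]
print(statuses, outbox_keys)  # {'o-1': 'CANCELED', 'o-2': 'PLACED'} ['o-1']
```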

Publisher Interface

The above code is a step-by-step example of how to publish both transactional and non-transactional events. Realistically, you’d want to abstract away those details, so you might end up with an interface like the following:

In the second publish method, the Connection is used to create the prepared statement that inserts the row into the outbox table. It’s the caller’s responsibility to commit the transaction after calling the publish method.
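A Python version of such an interface might look like the following sketch. Python has no method overloading, so the two variants get distinct names (`publish` and `publish_transactional`). The class names and the list-based stand-in for a Kafka producer are hypothetical. Note that `publish_transactional` never commits, because the caller owns the transaction.

```python
import json
import sqlite3
import uuid
from abc import ABC, abstractmethod
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class OrderCanceledEvent:
    order_id: str
    reason: str

    def to_bytes(self) -> bytes:
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")  # protobuf stand-in


class EventPublisher(ABC):
    @abstractmethod
    def publish(self, event: OrderCanceledEvent) -> None:
        """Non-transactional: send directly to the contract topic."""

    @abstractmethod
    def publish_transactional(self, event: OrderCanceledEvent, conn: sqlite3.Connection) -> None:
        """Transactional: insert into the outbox on the caller's connection."""


class OutboxEventPublisher(EventPublisher):
    def __init__(self, direct_sink: list) -> None:
        self.direct_sink = direct_sink  # hypothetical stand-in for a Kafka producer

    def publish(self, event: OrderCanceledEvent) -> None:
        self.direct_sink.append(("order-canceled", event.to_bytes()))

    def publish_transactional(self, event: OrderCanceledEvent, conn: sqlite3.Connection) -> None:
        # Deliberately no commit: the outbox row only becomes durable (and
        # visible to Debezium) when the caller commits its own transaction.
        conn.execute(
            "INSERT INTO outbox (id, aggregatetype, aggregateid, type, payload) "
            "VALUES (?, ?, ?, ?, ?)",
            (str(uuid.uuid4()), "order", event.order_id, type(event).__name__, event.to_bytes()),
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE outbox (id TEXT PRIMARY KEY, aggregatetype TEXT, "
    "aggregateid TEXT, type TEXT, payload BLOB)"
)
conn.execute("INSERT INTO orders VALUES ('o-1', 'PLACED')")
conn.commit()

publisher = OutboxEventPublisher(direct_sink=[])
event = OrderCanceledEvent(order_id="o-1", reason="FRAUD")

conn.execute("UPDATE orders SET status = 'CANCELED' WHERE id = 'o-1'")
publisher.publish_transactional(event, conn)
conn.rollback()  # caller aborts: neither change survives

conn.execute("UPDATE orders SET status = 'CANCELED' WHERE id = 'o-1'")
publisher.publish_transactional(event, conn)
conn.commit()  # caller commits: both changes land together
```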

Data Contract Monitoring

As in all software development, bugs slip through even extensive testing and make it to production. The most difficult bugs to catch are often subtle changes in behavior that don’t immediately set off alarms, and data is no different. Some aspects of semantics simply cannot be reliably enforced before deployment. You enforce what you can by explicitly implementing things like value constraints, or by using statistical analysis in testing and staging environments. At the end of the day, though, you still need good monitoring to alert you to changes in the semantics of your data. Part 3 of this series will focus on exactly this: enforcement and monitoring of data contract semantics.

Thanks for reading, and good luck on your journey towards implementing data contracts. Remember to subscribe for Part 3!

-Adrian