The Rise of Data Contracts

And Why Your Data Pipelines Don't Scale

👋 Hi folks, thanks for reading my newsletter! My name is Chad Sanderson, and I write about data, data products, data modeling, and the future of data engineering and data architecture. In today’s article, we’ll be looking at one of my favorite subjects to write about: Data Contracts! What are they, what problems do they solve, and how do you use them. Please consider subscribing if you haven’t already, reach out on LinkedIn if you ever want to connect, and join our Slack community Data Quality Camp for practitioner-led advice on Data Contracts and Data Quality at scale!

Garbage In / Garbage Out

If you’ve worked in computer science, mathematics, or data for any amount of time, you have probably heard the phrase ‘Garbage In, Garbage Out’ (GIGO). GIGO refers to the idea that the quality of an output is determined by the quality of its inputs. It’s my favorite type of phrase: simple and self-evident.

Yet despite near-universal head nodding wherever GIGO is mentioned, most modern data teams struggle with data quality at the source. Model-breaking pipeline failures, NULLs, head-scratching errors, and angry data consumers are a weekly occurrence with the data engineering organization often caught in the middle.

This issue is not isolated to early-stage data teams. I have spoken to enterprise-scale data organizations, from tech-first to tech-enabled companies, legacy businesses to high-profile Modern Data Stack consumers. In all cases, a failure to address GIGO seems to always rear its head.

My belief is that Data Contracts are the key to building a production-grade Data Warehouse and breaking the silo between data producers and data consumers. But what exactly is a data contract and why would you need one? In the spirit of #ProblemsNotSolutions, let’s start with understanding the current state of the world.

ELT, unintentional APIs, and the lack of consensual contracts

Today, engineers have almost no incentive to take ownership of the data quality they produce outside operational use cases. This is not their fault. They have been completely abstracted away from analytics and ML. Product Managers turn to data teams for their analytical needs, and data teams are trained to work with SQL and Python - not to develop a robust set of requirements and SLAs for upstream producers. As the old saying goes "If all you have is a hammer, every problem looks like a nail."

The Modern Data Stack has resulted in a reversal of responsibilities due to the distancing of service and product engineers from Analytics and Machine Learning. If you talk to almost any SWE that is producing operational data which is also being used for business-critical analytics they will probably have no idea who the customers are for that data, how it's being used, and why it's important.

While connecting ELT/CDC tools to a production database seems like a great way to load important data quickly and easily, this inevitably treats the database schema as a non-consensual API. Engineers often never agreed (or even wanted) to provide this data to consumers. Without that agreement in place, the data becomes untrustworthy. Engineers want to be free to change their data to serve whatever operational use cases they need. No warning is given because the engineer doesn’t know that a warning should be given or why.

This becomes problematic when the pricing algorithm that generates 80% of your company's revenue breaks after a column is dropped in a production table upstream. Yes, having monitoring in place downstream would help ensure the issue is at least caught quickly (no word on what caused the failure, though), but wouldn't it be an order of magnitude better if the column was never dropped in the first place, or the dataset was versioned so downstream teams could prepare themselves for a migration?

All of this manifests as something I call the GIGO Cycle:

The GIGO Cycle

1. Databases are treated as nonconsensual APIs

2. With no contract in place, databases can change at any time

3. Producers have no idea how their data is being used downstream

4. Cloud platforms (Snowflake) are not treated as production systems

5. Datasets break as changes are made upstream

7. Data Engineers inevitably must step in to fix the mess

8. Data Engineers begin getting treated as middle-men

9. Technical debt builds up rapidly - a refactor is the only way out

10. Teams argue for better ownership and a ‘single throat to choke’

11. Critical Production systems in the cloud (ML/Finance) fail

12. Blatant Sev1’s impact the bottom line, while invisible errors go undetected

13. The data becomes untrustworthy

14. Big corporations begin throwing people at the problem

15. Everyone else faces an endless up-hill battle

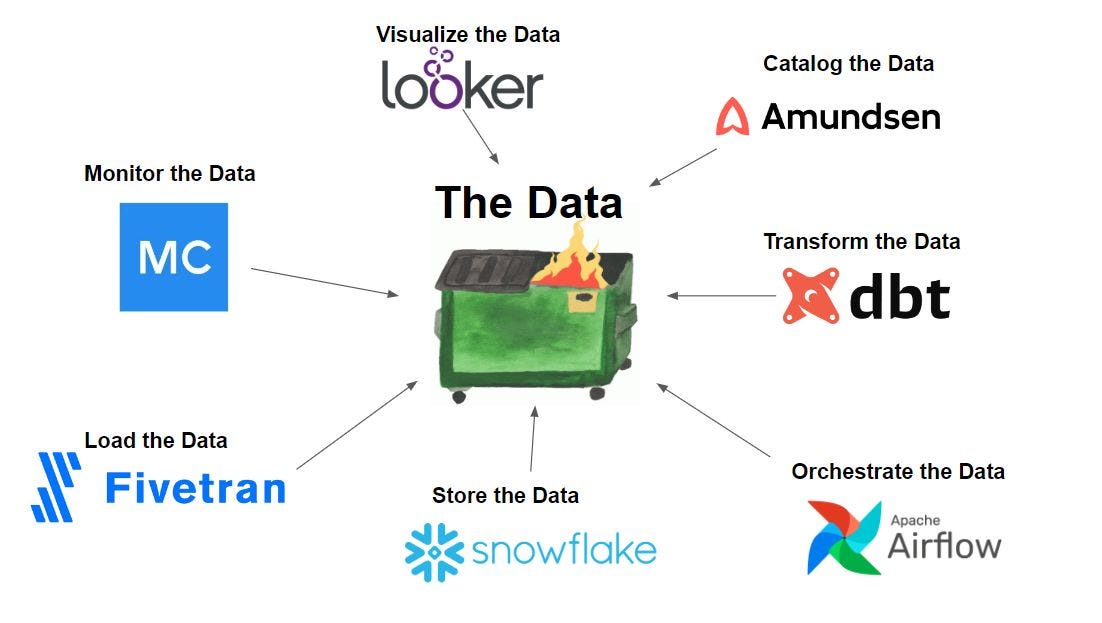

As core data problems continue to grow, tools are layered on top of this broken, untrustworthy architecture. While they might provide some respite in the chaos, the poor quality, lack of modeling, missing data, and fractured ownership create a chaotic and unscalable foundation.

Many companies that begin with 'simple' pipelines face a catastrophe of epic proportions as the tech debt mounts. At the most important time in an early-stage data org's lifecycle (pre-IPO or shortly post-IPO), the data infrastructure can't answer some of the most critical questions the business has with credibility, ML/AI projects fail to deliver value, and critical datasets can’t be trusted.

The Solution: Data Contracts

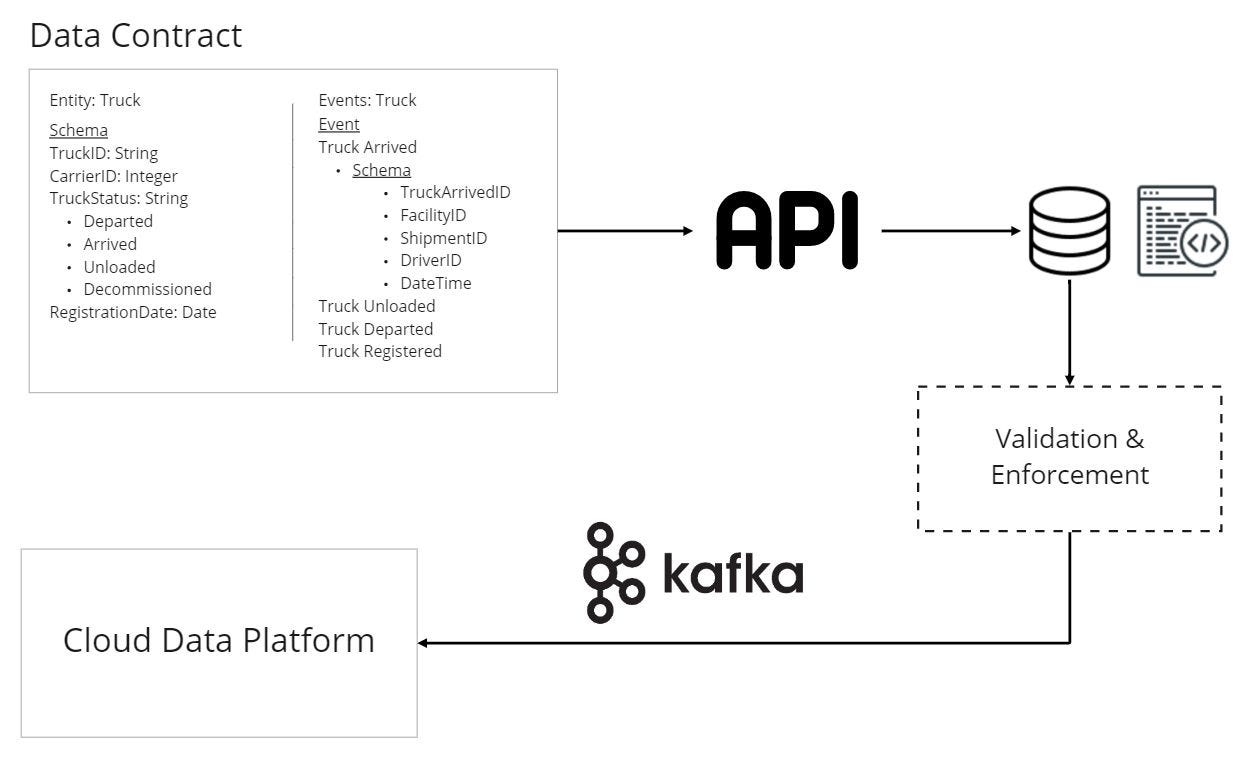

Data Contracts are API-like agreements between Software Engineers who own services and Data Consumers that understand how the business works in order to generate well-modeled, high-quality, trusted, real-time data.

Instead of data teams passively accepting dumps of data from production systems that were never designed for analytics or Machine Learning, Data Consumers can design contracts that reflect the semantic nature of the world: entities, events, attributes, and the relationships between them.

This abstraction allows Software Engineers to decouple their databases/services from analytical and ML-based requirements. Engineers no longer have to worry about causing production-breaking incidents when modifying their databases, and data teams can focus on describing the data they need instead of attempting to stitch the world together retroactively through SQL.

As an example, let's imagine that my team owns a Machine Learning model which consumes point-in-time data once a shipment is finalized in order to predict the ideal bid for an auction.

My training set includes data from our Tenders service, Shipper tables, and data on our current market prices, each contained in a different Postgres table. I expect that columns are NEVER dropped, that the values of each field ALWAYS remain the same, and that critical business logic ALWAYS holds (for example, there should be a 1:1 relationship between shipments and shipment opportunities). If any of these expectations is violated, my model will break, with a sizable production impact.
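To make those expectations concrete, here is a minimal sketch of how they could be written down as executable checks. It assumes rows arrive as plain Python dicts; the column names (`shipment_id`, `opportunity_id`, `market_price`) and the helper names are hypothetical and chosen only for illustration, not taken from any real schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnSpec:
    name: str
    dtype: type
    nullable: bool = False


# Hypothetical contract: these column names are illustrative assumptions.
SHIPMENT_CONTRACT = [
    ColumnSpec("shipment_id", str),
    ColumnSpec("opportunity_id", str),
    ColumnSpec("market_price", float),
]


def check_columns(rows, contract):
    """Report dropped columns, unexpected NULLs, and type changes."""
    violations = []
    for i, row in enumerate(rows):
        for col in contract:
            if col.name not in row:
                violations.append(f"row {i}: column '{col.name}' was dropped")
                continue
            value = row[col.name]
            if value is None:
                if not col.nullable:
                    violations.append(f"row {i}: column '{col.name}' is NULL")
            elif not isinstance(value, col.dtype):
                violations.append(
                    f"row {i}: column '{col.name}' is not {col.dtype.__name__}"
                )
    return violations


def check_one_to_one(rows, left, right):
    """Report rows that break a 1:1 relationship between two columns."""
    left_to_right, right_to_left = {}, {}
    violations = []
    for i, row in enumerate(rows):
        l, r = row[left], row[right]
        # setdefault returns the first value seen for this key.
        if left_to_right.setdefault(l, r) != r:
            violations.append(f"row {i}: {left}={l!r} maps to more than one {right}")
        if right_to_left.setdefault(r, l) != l:
            violations.append(f"row {i}: {right}={r!r} maps to more than one {left}")
    return violations
```

Running both checks against a sample of rows before the training set is built would surface a dropped column or a broken 1:1 relationship as an explicit violation rather than a silent model failure.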

Contracts can be used to create an abstraction between operational data and other downstream consumers of data for analytical purposes. At Convoy, we do this by leveraging technologies similar to Protobuf (an internal IDL) and Kafka to provide an SDK for engineering teams to implement entity CRUD and service-based events as strongly typed schema.

This model allows engineers to emit the data that downstream teams require in the shape that it's needed. Data APIs are versioned with strong CI/CD and change management. Internal service details are not exposed to the greater organization allowing for personal flexibility. Data consumers define the schema and properties they NEED, instead of being forced to accept what's coming from production even if it is of low quality or missing critical components for their use cases. As a pleasant by-product, this strongly enforced schema with clear semantic meaning paves the way towards an event-driven architecture (but more on that another day).
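As a rough illustration of what a strongly typed, versioned event might look like, here is a small sketch in plain Python. It is not the IDL or SDK described above: the `ShipmentFinalized` class, its fields, and the `to_message` helper are all hypothetical, and a real setup would likely use a schema language and a message broker rather than dataclasses and JSON strings.

```python
import json
from dataclasses import asdict, dataclass, fields
from datetime import datetime, timezone
from typing import ClassVar


@dataclass(frozen=True)
class ShipmentFinalized:
    """Hypothetical semantic event; field names are illustrative assumptions."""

    # ClassVar attributes describe the contract and are not payload fields.
    schema_name: ClassVar[str] = "shipment_finalized"
    schema_version: ClassVar[int] = 1

    shipment_id: str
    shipper_id: str
    final_price_usd: float
    finalized_at: datetime

    def __post_init__(self):
        # Reject wrongly typed values at the producer, before anything is emitted.
        for f in fields(self):
            value = getattr(self, f.name)
            if not isinstance(value, f.type):
                raise TypeError(
                    f"{f.name} must be {f.type.__name__}, got {type(value).__name__}"
                )


def to_message(event: ShipmentFinalized) -> str:
    """Serialize the event with its schema name and version attached."""
    payload = asdict(event)
    payload["finalized_at"] = event.finalized_at.isoformat()
    envelope = {
        "schema": event.schema_name,
        "version": event.schema_version,
        "payload": payload,
    }
    return json.dumps(envelope, sort_keys=True)


event = ShipmentFinalized(
    shipment_id="s1",
    shipper_id="shipper-9",
    final_price_usd=1250.0,
    finalized_at=datetime(2022, 1, 1, tzinfo=timezone.utc),
)
message = to_message(event)
```

The point of the design is that the service's internal tables never appear in the message. Consumers see only the event shape and its version, so the producer stays free to change its database underneath.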

What about my existing ELT/CDC Pipeline?

A question I often get when talking about data contracts is, “What happens to my existing pipelines? Do they go away?” In my opinion, NO.

There are two ever-present use cases for data in the Lake/Lakehouse:

1. Data teams must explore the data we have to understand how best to use it. This is ideal for the existing Modern Data Stack, which places a focus on speed, flexibility, and iteration. Once there is some level of belief in the data and what it means, the data team is ready to create a production pipeline.

2. The production pipeline has an explicitly clear use case. It powers a training set for an ML model, is used for financial reporting, or drives key company dashboards. The data must be well modeled, conform to some fact of the matter about the world, and requires an explicit contract from producers. That contract requires producers to inform consumers if things change and to generate an abstraction that decouples operational use cases from analytics use cases.

Production pipelines are surfaced as trustworthy, high-quality, well-owned data supported through an engineering on-call and clear semantic documentation. They can be leveraged by anyone else in the business and iteratively developed over time.

These two use cases form a lifecycle of discovery, MVP pipeline development, validation, and eventual production quality deployment that is reminiscent of the development workflow of any other feature leading to true data product development.

Frequently Asked Questions

Q: Do data contracts require consumers to know all the data they need in advance?

A: No. The abstraction layer between services and consumers means that the contract can change over time, iteratively, in a way that is backward compatible. This allows teams to drive business needs fast but paves the way for high-quality modeling in the future.
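One way to picture "backward compatible" is as a check that runs in CI whenever a contract changes. The sketch below assumes a contract is a simple dict mapping field names to a type and a required flag; that representation, the `breaking_changes` name, and the specific rules (removals, type changes, and new required fields are breaking; new optional fields are not) are assumptions made for illustration.

```python
def breaking_changes(old: dict, new: dict) -> list:
    """Compare two contract versions and list changes that would break consumers.

    Each contract maps a field name to {"type": str, "required": bool}.
    """
    problems = []
    for name, spec in old.items():
        if name not in new:
            problems.append(f"field '{name}' was removed")
        elif new[name]["type"] != spec["type"]:
            problems.append(
                f"field '{name}' changed type from {spec['type']} to {new[name]['type']}"
            )
    for name, spec in new.items():
        # A new required field is flagged because older records will not have it.
        if name not in old and spec.get("required", False):
            problems.append(f"new field '{name}' is required")
    return problems


v1 = {
    "shipment_id": {"type": "string", "required": True},
    "price": {"type": "double", "required": True},
}
# Adding an optional field is an iterative, non-breaking change.
v2 = {**v1, "currency": {"type": "string", "required": False}}
# Dropping a field that consumers already rely on is a breaking change.
v3 = {"shipment_id": {"type": "string", "required": True}}
```

Under these rules, moving from `v1` to `v2` yields no problems, while moving from `v1` to `v3` reports the removed `price` field.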

Q: Are producers expected to have multiple data contracts per consumer?

A: No. Producers own a single contract for each entity (CRUD) or semantic event, which is leveraged by every consumer. Consumers make requests to modify these contracts as their requirements grow over time.

Q: Isn’t this a lot to ask from our software engineers?

A: In my experience, no. As long as engineers are given a simple-to-use interface for implementing contracts, protecting themselves and the business from production incidents is high-value, low-effort work. Great ROI!

Q: Traditionally our data producers are reluctant to make enhancements for analytics use cases. How does this change things?

A: No SWE wants to update their databases with non-essential information on-demand! This abstraction provides a vehicle they can use to fulfill those requests simply. Secondly, I recommend implementing column-level lineage for source tables to provide a mechanism for your SWEs to understand the downstream assets they are impacting with every database change. It’s hard to take accountability without awareness.
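To show what column-level lineage could give an engineer in practice, here is a minimal sketch that walks a lineage graph from a source column to everything downstream of it. The graph is hand-written and every asset name in it is hypothetical; in a real system the edges would come from a lineage tool or from parsed SQL.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the assets listed as its values.
LINEAGE = {
    "shipments.market_price": ["staging.shipment_prices"],
    "staging.shipment_prices": ["ml.bid_training_set", "finance.revenue_dashboard"],
    "shipments.internal_note": [],
}


def downstream_assets(column, lineage):
    """Return every asset reachable from the given source column, sorted by name."""
    seen = set()
    queue = deque([column])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


impacted = downstream_assets("shipments.market_price", LINEAGE)
```

Surfacing a list like `impacted` in a pull request that touches `shipments.market_price` is one way to give the engineer the awareness that accountability depends on.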

Q: How could I create something like this?

A: Be on the lookout for an article from the official Convoy Tech blog soon! We’ll be talking at length about how we implemented Data contracts using open source tools.

Q: Could all of this be handled through better data architecture?

A: Yes and no. One of the core challenges in modern tech companies today is that data architecture is sacrificed for the sake of rapid software iteration. After years of accumulating data debt, fully refactoring an architecture is costly, to say the least. Data Contracts are a vehicle to iteratively build core data pipelines following a well-modeled design. They facilitate the Agile development of the data model.

Q: Where should I start with Data Contracts?

A: I’d recommend starting with the most recent data-centric Sev1 or Sev2. Clearly define the upstream source data, review the contract design, create an SLA, and implement contracts for a single impactful use case. Make sure the data team is brought on board!

If you have any other questions feel free to ask below or connect with me on LinkedIn! If you enjoyed the article please subscribe and leave a like, I’d really appreciate it.

-Chad

How to implement Data Contracts

Want to learn how to implement data contracts? Check out the post below for a step-by-step guide to getting started.

I like the idea of data contracts in theory. It is always good to have layers of abstraction. But it feels like another way for some companies to sell services and products. How do we know this won't be another item in our growing data portfolio that we will need to maintain?

And we were supposed to fix this with data governance work and data management tools. Then we had data mesh and data product idea. What happened to those? It reminds me of that xkcd comic where we create another standard to fix everything (https://xkcd.com/927/)!

Using your example, if we have strongly typed schema, event-based architecture, processes and policies in place, why do you need a contract? Isn't the contract implicit in the SLA and internal procedures? Are we just putting an API on top of this to make it fancy?

I like the idea that this is machine-readable and could be handled via API but I believe the problem is social and cultural, not technical.

"Data consumers define the schema and properties they NEED, instead of being forced to accept what's coming from production even if it is of low quality or missing critical components for their use cases."

This was supposed to be fixed by GraphQL, right?

Anyway, good idea. But I am a bit skeptical.

Thanks for writing this!

Coming from a background in backend development and microservices, I love the idea of Data Contracts. But whenever we have discussed this at work, we haven't found a good way to implement it on our current setup. More specifically:

- Airflow batch pipelines that fetch snapshots directly from the DB. If the contract doesn't match the operational DB schema, is the Product team responsible for implementing the transformation step? How? With Airflow itself?

- ELT pipelines and integration with tools such as Fivetran/Data Transfer/GA which implement ingestion directly into the DWH. How could a contract be placed in between?